Right: A technician-artist working on the set of “The Boxtrolls” (2014). / modules it stores (which are each a registered submodule of the. / performing a transformation on the Sequential applies to each of the. / it allows treating the whole container as a single module, such that. Center: The two evil and dangerous aunts from “Kubo and the Two Strings” (2016). /// a Sequential provides over manually calling a sequence of modules is that. It then chains outputs to inputs sequentially for each subsequent module. The forward () method of Sequential accepts any input and forwards it to the first module it contains. Alternatively, an OrderedDict of modules can be passed in. Left: Coraline in the Other garden from “Coraline” (2006). Modules will be added to it in the order they are passed in the constructor. Laika Studios in Portland Oregon has created some wonderful stop-motion movies - sequential art. Do we really need this feature that will bloat up our codebase?”

Pytorch nn sequential call code#

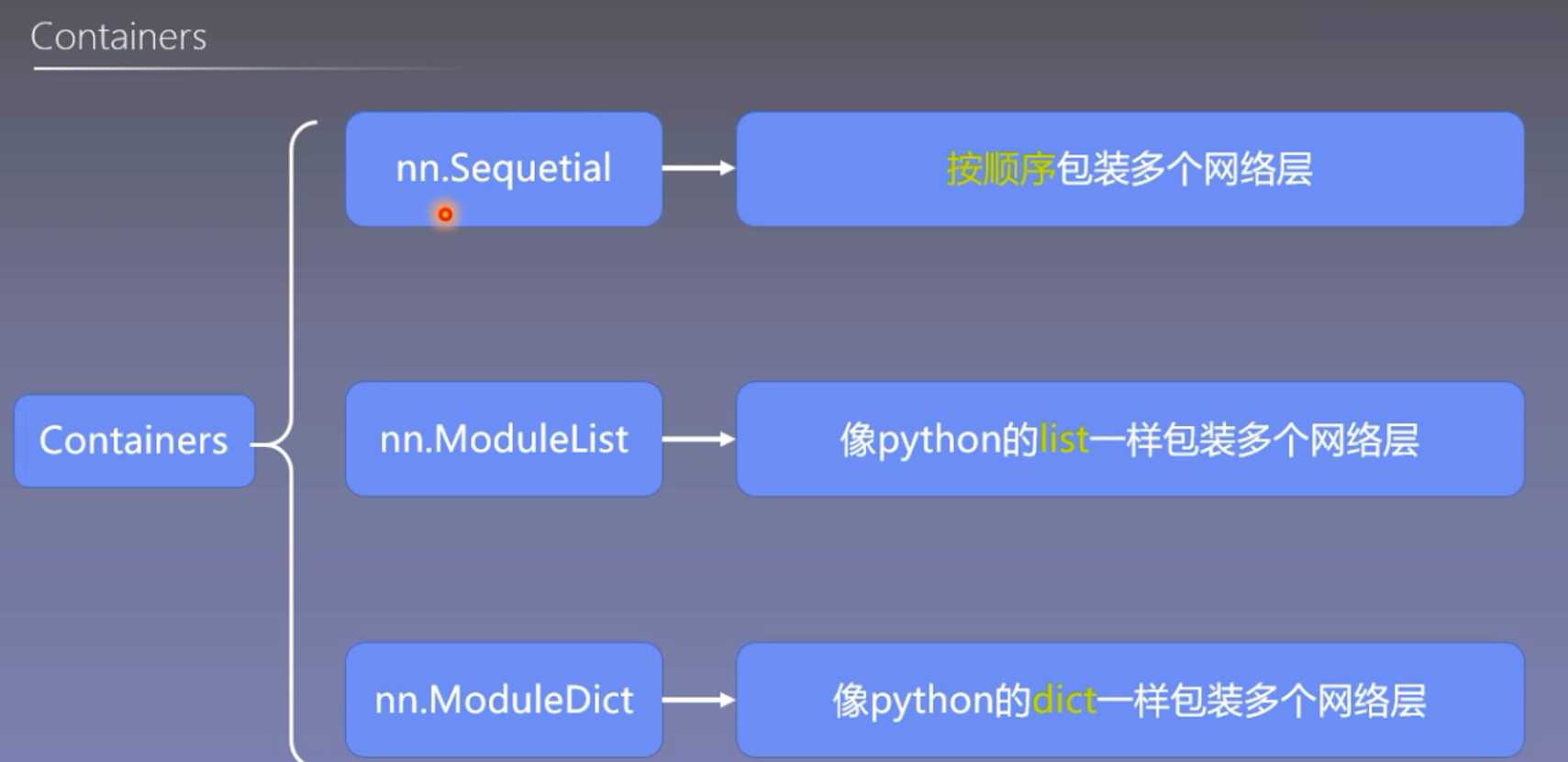

This often happens with open source code libraries where anybody can toss code in, and there’s nobody in overall charge saying, “Wait a minute. In my opinion, one of the biggest design weaknesses with the PyTorch library is that there are just too many ways to do things. The equivalent network using the Sequential approach:
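The Sequential container behavior (modules run in constructor order, an OrderedDict can supply names, and forward() chains each output into the next module) can be illustrated with a minimal sketch. The layer sizes and the names `hid1`, `act`, and `oupt` are illustrative assumptions, not code from the original post.

```python
from collections import OrderedDict

import torch
import torch.nn as nn

torch.manual_seed(0)

# Positional form: modules run in the order they are passed in.
net_a = nn.Sequential(
    nn.Linear(4, 7),
    nn.Tanh(),
    nn.Linear(7, 3),
)

# OrderedDict form: same structure, but each submodule gets a name.
# The names hid1, act, and oupt are illustrative choices.
net_b = nn.Sequential(OrderedDict([
    ("hid1", nn.Linear(4, 7)),
    ("act", nn.Tanh()),
    ("oupt", nn.Linear(7, 3)),
]))

x = torch.randn(2, 4)  # a batch of two 4-feature inputs

# Calling the container invokes forward(), which chains the modules.
y = net_a(x)

# Manually chaining the same modules gives the same result.
manual = x
for layer in net_a:
    manual = layer(manual)

# A transformation on the container applies to every stored submodule.
net_b = net_b.double()
param_dtypes = {p.dtype for p in net_b.parameters()}
```

Because the container is itself a module, a single call such as `double()` reaches every layer, which is the main convenience over calling the layers one by one.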

Somewhat confusingly, PyTorch has two different ways to create a simple neural network. You can use torch.nn.Module() or you can use torch.nn.Sequential(). The Module approach is more flexible than the Sequential approach, but the Module approach requires more code.

The difference between the two approaches is best described with a concrete example: a 4-7-3 tanh network. The Module approach defines the network as a class, and the exact same network could be created using Sequential(). Notice that with Module() you must define a forward() method but with Sequential() an implied forward() method is defined for you.

If you’re new to PyTorch, the Sequential approach looks very appealing. However, for non-trivial neural networks such as a variational autoencoder, the Module approach is much easier to work with.

Unless told otherwise, both approaches use the PyTorch default mechanism to initialize weights and biases. Both Module and Sequential can also be written with explicit weight and bias initialization, for example Xavier uniform weights and zero biases. As networks get more complex, the Sequential approach quickly loses its simplicity advantage over the Module approach.

When loading a pretrained model in PyTorch, the usual workflow starts with loading the model weights (in a dictionary usually called a state dict).
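As a rough illustration of the comparison, here is a minimal sketch of a 4-7-3 tanh network written both ways, each with Xavier uniform weights and zero biases. This is not the original post's code: the names `Net`, `hid1`, `oupt`, `init_linear`, and `make_sequential_net` are hypothetical, and leaving the output layer without an activation is an assumption.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    """4-7-3 tanh network written with the Module approach."""

    def __init__(self):
        super().__init__()
        self.hid1 = nn.Linear(4, 7)  # 4 inputs -> 7 hidden nodes
        self.oupt = nn.Linear(7, 3)  # 7 hidden nodes -> 3 outputs
        # Explicit initialization: Xavier uniform weights, zero biases.
        for layer in (self.hid1, self.oupt):
            nn.init.xavier_uniform_(layer.weight)
            nn.init.zeros_(layer.bias)

    def forward(self, x):
        # With Module, forward() must be written by hand.
        z = torch.tanh(self.hid1(x))
        return self.oupt(z)  # assumption: no activation on the outputs


def init_linear(m):
    # Hypothetical helper for Module.apply(); it touches only Linear layers.
    if isinstance(m, nn.Linear):
        nn.init.xavier_uniform_(m.weight)
        nn.init.zeros_(m.bias)


def make_sequential_net():
    """The same 4-7-3 tanh network written with the Sequential approach."""
    net = nn.Sequential(
        nn.Linear(4, 7),
        nn.Tanh(),
        nn.Linear(7, 3),
    )
    # forward() is implied, but explicit initialization needs an extra pass.
    net.apply(init_linear)
    return net


module_net = Net()
seq_net = make_sequential_net()

x = torch.randn(5, 4)  # a batch of five 4-feature inputs
out_module = module_net(x)
out_seq = seq_net(x)
```

In this sketch the Sequential version stays short only until initialization is customized, at which point it needs the separate `apply()` pass, while the Module version keeps everything inside one class.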
